
The image below shows the final working prototype. On the left is the hard-foam housing, which held the vibrating motors firmly in place while still allowing them to vibrate freely. The right image shows the tactile display's size relative to the hand. The vibrating motors rest 2 mm above the surface to improve sensation.



I interviewed Rita from the Danish blind community. She was very comfortable with technology and used a PDA to manage her life and read e-books, but mobility was her greatest concern. She lived near a park that she never visited for fear of getting lost, and busy intersections posed a major daily challenge.


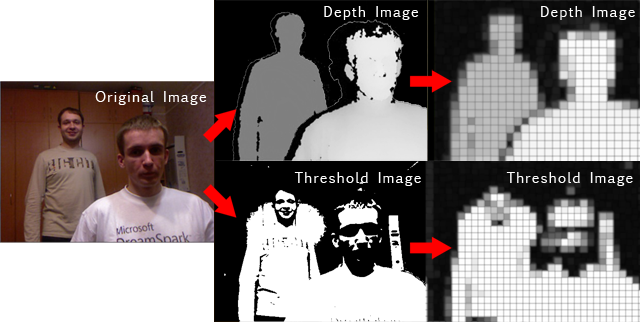

While sonar devices are commercially available today, they are limited to sensing in one dimension. By contrast, a 2D grid can convey shape, scale, and motion to better inform users.


Finally, other experimental systems for the blind use color video. However, the Kinect's depth video offers significantly better image quality for this application. Additionally, the intensity of a tactile display can change with depth, so the vibration can be stronger for closer objects and weaker for distant ones.
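The depth-to-vibration idea can be sketched in a few lines of Python. This is only an illustration and not the prototype's actual code: the function names, the 500 mm to 4000 mm working range, the 0 to 255 drive level, and the convention that a depth of 0 means "no reading" are all assumptions made for the example.

```python
def depth_to_intensity(depth_mm, near_mm=500, far_mm=4000, max_level=255):
    """Map one depth reading to a motor drive level: nearer means stronger.

    All parameter defaults are illustrative assumptions, not measured values.
    """
    if depth_mm <= 0:  # assumed convention: 0 means the sensor had no reading
        return 0
    # Clamp to the working range so out-of-range values saturate.
    clamped = min(max(depth_mm, near_mm), far_mm)
    # 1.0 at the near limit, 0.0 at the far limit.
    closeness = (far_mm - clamped) / (far_mm - near_mm)
    return round(closeness * max_level)


def frame_to_grid(frame, rows, cols):
    """Reduce a depth frame (list of rows, in mm) to a rows x cols intensity grid.

    Each grid cell takes the nearest valid depth in its block of pixels,
    so the closest obstacle in that region sets the motor strength.
    """
    height, width = len(frame), len(frame[0])
    grid = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            valid = [
                frame[y][x]
                for y in range(y0, y1)
                for x in range(x0, x1)
                if frame[y][x] > 0
            ]
            row.append(depth_to_intensity(min(valid)) if valid else 0)
        grid.append(row)
    return grid
```

For instance, `frame_to_grid(depth_frame, 4, 4)` would produce one drive level per motor on a hypothetical 4 x 4 display. Taking the nearest depth in each block, rather than the average, is a design choice that favors not missing small nearby obstacles.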